In the ongoing battle against the rise of convincing and widespread fake images, camera industry leaders Nikon, Sony Group, and Canon are deploying cutting-edge technology to authenticate the origin and integrity of photos.

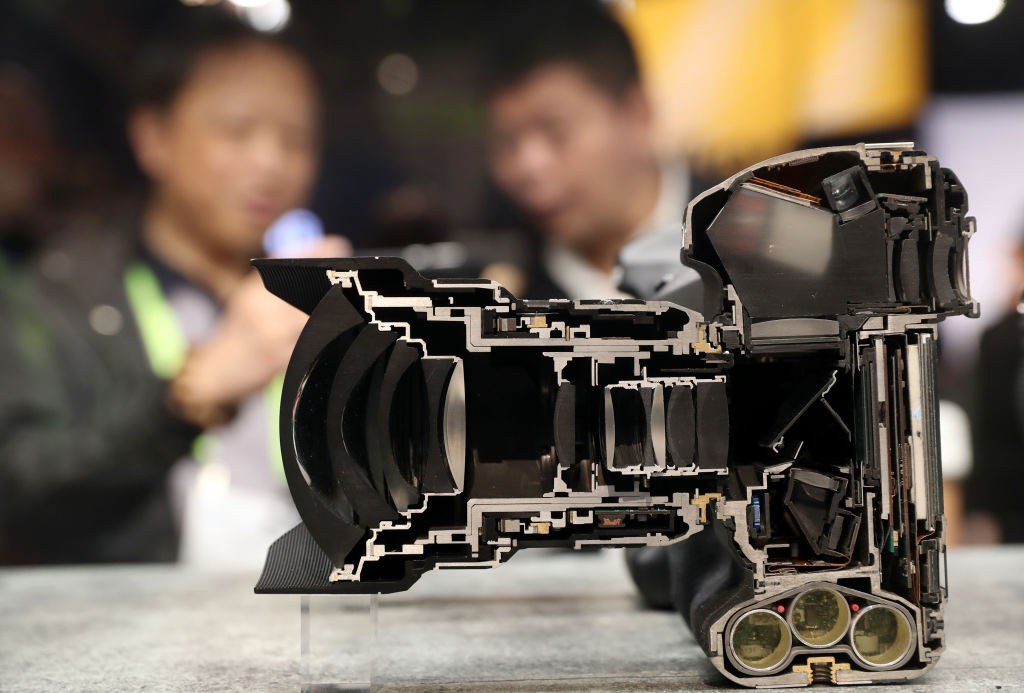

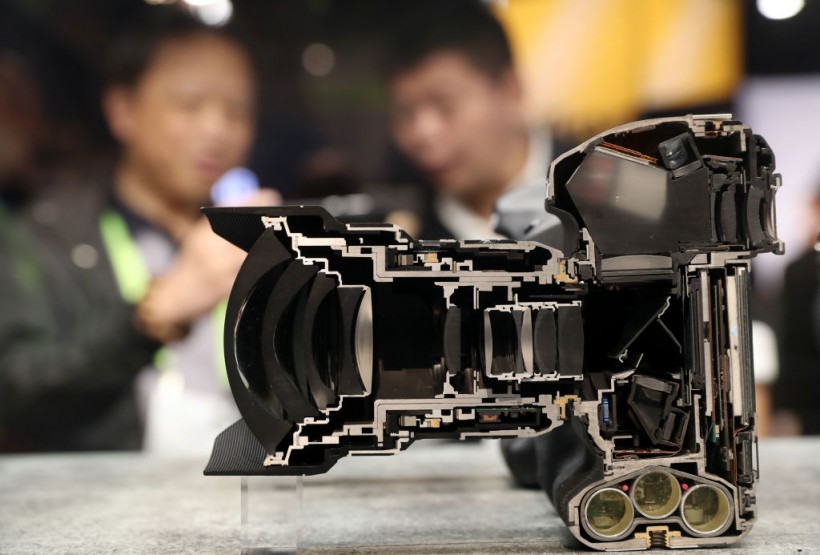

(Photo : Justin Sullivan/Getty Images)

LAS VEGAS, NEVADA – JANUARY 08: A Nikon D5 camera is shown cut in half at the Nikon booth during CES 2019 at the Las Vegas Convention Center on January 8, 2019 in Las Vegas, Nevada. CES, the world’s largest annual consumer technology trade show, runs through January 11 and features about 4,500 exhibitors.

Combatting Deepfakes

The three companies are collaboratively developing digital signatures embedded in their cameras, serving as secure proof of the image’s origin. These tamper-resistant signatures will record crucial information such as the date, time, location, and photographer.

Interesting Engineering reported that the technology aims to provide a valuable tool for photojournalists and other professionals who depend on the credibility of their work.

Nikon plans to introduce this feature in its mirrorless cameras, while Sony and Canon will incorporate it into their professional-grade mirrorless cameras.

The trio of camera industry leaders has reached a consensus on a universal standard for digital signatures, ensuring compatibility with the web-based tool Verify.

Launched through a collaboration among global news organizations, technology firms, and camera manufacturers, Verify enables free verification of image credentials. Users can easily access relevant information if an image carries a digital signature.

If artificial intelligence was used to create or alter an image, Verify flags it with “No Content Credentials.”
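
For readers curious how a tamper-resistant signature can bind capture details to a photo, the idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the manufacturers' method or how Verify works internally: it uses a shared-secret HMAC from the Python standard library as a stand-in for the public-key signatures real cameras would likely use, and the key and the function names `sign_photo` and `check_photo` are hypothetical.

```python
import hashlib
import hmac
import json
from typing import Optional

# Hypothetical secret standing in for a key stored inside the camera.
CAMERA_KEY = b"demo-camera-key"


def _tag(image_bytes: bytes, metadata: dict) -> str:
    """Compute an HMAC over the capture metadata and a hash of the image."""
    meta_bytes = json.dumps(metadata, sort_keys=True).encode("utf-8")
    image_hash = hashlib.sha256(image_bytes).digest()
    return hmac.new(CAMERA_KEY, meta_bytes + image_hash, hashlib.sha256).hexdigest()


def sign_photo(image_bytes: bytes, metadata: dict) -> dict:
    """Attach a tag that ties the metadata to these exact image bytes."""
    return {"metadata": metadata, "tag": _tag(image_bytes, metadata)}


def check_photo(image_bytes: bytes, credentials: Optional[dict]) -> str:
    """Report whether the credentials still match the image."""
    if credentials is None:
        return "No Content Credentials"
    expected = _tag(image_bytes, credentials["metadata"])
    # compare_digest avoids leaking information through timing differences.
    if hmac.compare_digest(expected, credentials["tag"]):
        return "Credentials verified"
    return "No Content Credentials"


photo = b"raw sensor data"
creds = sign_photo(photo, {"date": "2024-01-08", "photographer": "Example Name"})
print(check_photo(photo, creds))              # untouched image
print(check_photo(photo + b"edit", creds))    # altered image
print(check_photo(photo, None))               # image with no signature
```

Because the tag covers both the metadata and a hash of the image, changing either one after capture makes the check fail in this sketch.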

Affecting Notable Figures

The demand for such technological solutions is evident in light of the proliferation of deepfakes featuring notable figures such as former US President Donald Trump and Japanese Prime Minister Fumio Kishida.

These incidents have underscored the growing concerns about the reliability of online content. Additionally, researchers at China’s Tsinghua University have developed a latent consistency model, a novel generative AI technology capable of producing approximately 700,000 images daily.

Several technology companies are stepping up in the fight against fake images. Google, for instance, has introduced a tool that embeds invisible digital watermarks into AI-generated images, detectable by another tool.

Intel, meanwhile, has developed technology that analyzes changes in skin color in images, which indicate blood flow under the skin, to determine an image’s authenticity. Hitachi is working on technology to counter online identity fraud by verifying user images.

Nikkei Asia reported that the new camera technology is expected to arrive in 2024, with Sony releasing it in the spring and Canon following later in the year.

Sony is even contemplating extending the feature to videos, while Canon is working on a similar video technology. Canon has also launched an image management app designed to ascertain whether images are human-captured.

ⓒ 2023 TECHTIMES.com All rights reserved. Do not reproduce without permission.
